Four-key Piano on Fipsy FPGA

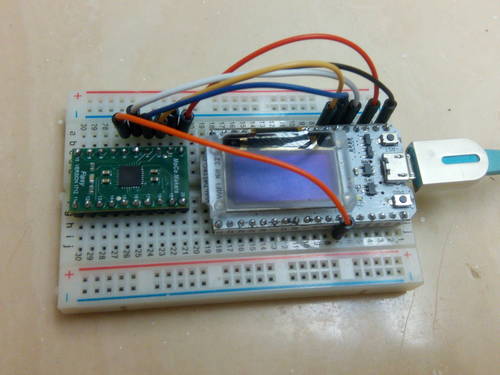

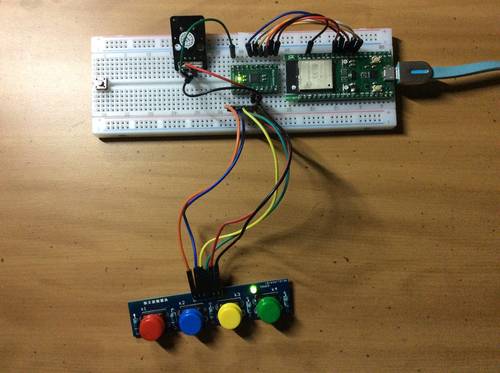

The newest addition to yoursunny.com's toy vault is the Fipsy FPGA Breakout Board, a tiny circuit board built around a Lattice MachXO2-256 field-programmable gate array (FPGA). After porting an SPI programmer to ESP32, it's time to write some Verilog! Blinky is boring, but I did it anyway. Now I'm moving on to better stuff: a piano.

The piano is an acoustic musical instrument played using a keyboard. When a key is pressed, a hammer strikes a string, causing it to vibrate and produce sound at a certain frequency. A standard piano has 88 keys, each tuned to a well-defined frequency. My "piano", built on Fipsy, has four keys and uses a passive buzzer to produce sound.
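To make "well-defined frequency" concrete, here is a small Python sketch that computes key frequencies under the common 12-tone equal temperament tuning, where key 49 (A4) is 440 Hz and each key is a factor of 2^(1/12) above the previous one. The function name `key_frequency` and the choice of four example notes are my own illustration, not necessarily the notes used in this project.

```python
def key_frequency(n: int) -> float:
    """Frequency in Hz of the n-th key on an 88-key piano.

    Assumes 12-tone equal temperament with A4 (key 49) tuned to 440 Hz.
    """
    if not 1 <= n <= 88:
        raise ValueError("an 88-key piano has keys 1 through 88")
    return 440.0 * 2.0 ** ((n - 49) / 12)


# Four example keys (a hypothetical selection for a four-key piano).
EXAMPLE_KEYS = {"C4": 40, "D4": 42, "E4": 44, "F4": 45}

for name, n in EXAMPLE_KEYS.items():
    print(f"{name}: key {n}, {key_frequency(n):.2f} Hz")
```

Going up 12 keys doubles the frequency, so key 61 (A5) comes out at 880 Hz.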

Play Tone on Passive Buzzer with FPGA

A passive buzzer has no built-in oscillator: it plays a tone only when driven by an oscillating electrical signal at the desired frequency.

In Arduino, the tone() function generates a square wave of a specified frequency, which can be used to control a passive buzzer.
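One common way to produce such a square wave from a faster clock is to count clock ticks and flip the output every half period. The Python sketch below simulates that idea so the arithmetic can be checked before writing any hardware description. The 2.08 MHz clock value and the helper names `half_period_ticks` and `square_wave` are assumptions for illustration only; substitute the clock your own design actually uses.

```python
def half_period_ticks(clk_hz: int, tone_hz: float) -> int:
    """Clock ticks between output toggles for a roughly 50% duty square wave."""
    return max(1, round(clk_hz / (2 * tone_hz)))


def square_wave(clk_hz: int, tone_hz: float, n_ticks: int) -> list[int]:
    """Simulate a tick counter that toggles its output every half period.

    Returns the output level (0 or 1) seen on each of n_ticks clock ticks.
    """
    limit = half_period_ticks(clk_hz, tone_hz)
    level, count, out = 0, 0, []
    for _ in range(n_ticks):
        out.append(level)
        count += 1
        if count == limit:  # half period elapsed: reset counter, flip output
            count = 0
            level ^= 1
    return out


CLK_HZ = 2_080_000  # assumed clock frequency, not taken from the article
ticks = half_period_ticks(CLK_HZ, 440.0)
actual_hz = CLK_HZ / (2 * ticks)
print(f"toggle every {ticks} ticks, actual tone {actual_hz:.2f} Hz")
```

Because the tick count must be an integer, the generated tone is slightly off from the requested one; with a clock in the megahertz range the error is a small fraction of a hertz for notes in the middle of the keyboard.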